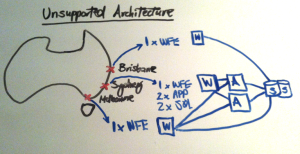

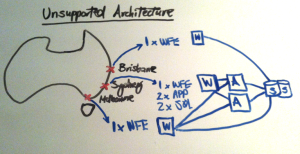

In discussions with IT pros at client sites, I have several times seen them start designing their farm to meet performance requirements for users in different states (e.g. Brisbane, Sydney, Melbourne) by putting the core of the farm in Sydney, with one web front end (WFE) in Brisbane and another in Melbourne. Essentially, an architecture that looks like this:

What’s the challenge here?

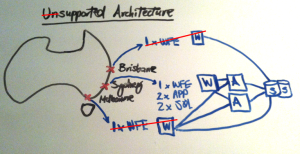

The challenge is that Microsoft won't support it. What has been created here is a stretched farm with a latency of more than 1 ms between the WFEs (W), application servers (A) and SQL Servers (S). Why isn't an environment like this supported? Because it causes performance problems: all the servers inside a farm need to communicate with one another quickly. To give an idea of how much performance degrades, the figure typically quoted is 50% for every 1 ms of delay. Ouch!
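To make the 1 ms threshold and the quoted rule of thumb concrete, here is a minimal Python sketch. The helper names and latency figures are hypothetical, and reading "50% per 1 ms" as throughput halving for each millisecond (rather than dropping linearly) is my own assumption, not a published formula.

```python
SUPPORTED_LATENCY_MS = 1.0  # intra-farm latency limit discussed above


def relative_throughput(latency_ms: float) -> float:
    """Fraction of baseline throughput left.

    Assumption: the "50% per 1 ms" rule of thumb compounds, i.e. throughput
    halves for every millisecond of latency.
    """
    return 0.5 ** latency_ms


def check_link(name: str, latency_ms: float) -> str:
    """Report whether a server-to-server link is inside the supported limit."""
    supported = latency_ms < SUPPORTED_LATENCY_MS
    status = "OK" if supported else "NOT SUPPORTED"
    return (f"{name}: {latency_ms:.1f} ms -> {status}, "
            f"~{relative_throughput(latency_ms):.2%} of baseline throughput")


# Hypothetical measurements, for illustration only
for link, ms in [("Sydney WFE -> Sydney SQL", 0.3),
                 ("Melbourne WFE -> Sydney SQL", 12.0)]:
    print(check_link(link, ms))
```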

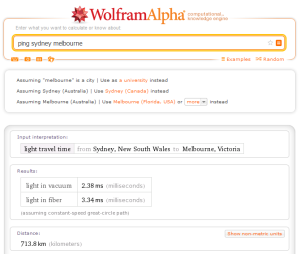

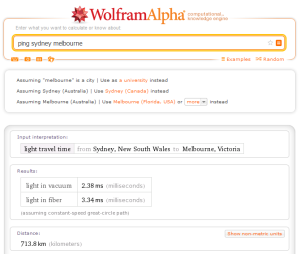

I have also occasionally heard the claim that it is possible to ping from Sydney to Melbourne in under 1 ms. With the help of Physics 101, we can show that this cannot be the case. Enter Wolfram Alpha to save us some time: let's check how long it would take a beam of light to travel from Sydney to Melbourne (one way only, not there and back):

2.38 ms. How about light sent through fibre? 3.34 ms. What does this mean? Even in the absolute best case, it takes at least 3.34 ms for data to travel from Sydney to Melbourne, and because ping measures the round trip, a ping can never come back in less than about 6.7 ms. In practice it is slower still, because real fibre routes are not straight lines and there is routing overhead and network congestion on top. This is why an interstate stretched farm such as this cannot be supported by Microsoft.
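The same back-of-the-envelope calculation can be reproduced in a few lines of Python. This is a sketch assuming approximate city-centre coordinates and a fibre refractive index of 1.47 (a typical value for single-mode fibre). With that index the fibre figure comes out at roughly 3.5 ms, a little above Wolfram Alpha's 3.34 ms, which implies an index of about 1.4. Either way, it is well over 1 ms.

```python
import math

C_KM_PER_S = 299_792.458   # speed of light in a vacuum, km/s
FIBRE_INDEX = 1.47         # assumed refractive index of typical single-mode fibre
EARTH_RADIUS_KM = 6371.0   # mean Earth radius

# Approximate city-centre coordinates (lat, lon) in degrees, assumed for illustration
SYDNEY = (-33.8688, 151.2093)
MELBOURNE = (-37.8136, 144.9631)


def great_circle_km(a, b):
    """Haversine (straight-line over the Earth's surface) distance in km."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def one_way_ms(distance_km, refractive_index=1.0):
    """Minimum one-way propagation delay in milliseconds (no routing, no queues)."""
    return distance_km / (C_KM_PER_S / refractive_index) * 1000


distance_km = great_circle_km(SYDNEY, MELBOURNE)
vacuum_ms = one_way_ms(distance_km)
fibre_ms = one_way_ms(distance_km, FIBRE_INDEX)

print(f"distance:        {distance_km:.0f} km")
print(f"vacuum, one way: {vacuum_ms:.2f} ms")
print(f"fibre, one way:  {fibre_ms:.2f} ms")
print(f"fibre, ping:     {2 * fibre_ms:.2f} ms")  # ping measures the round trip
```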

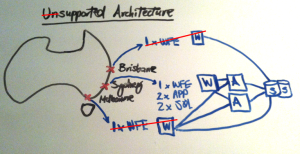

So how do we fix the supportability issue?

To get the farm back into a usable (and supported) state, we need to drop the web front ends in Brisbane and Melbourne. All requests from users in Brisbane and Melbourne are then served by the farm in Sydney.

The other option, if you really must stretch the farm across data centres (usually for cheap(er) and simple(r) disaster recovery), is to make sure the data centres are in the same city, e.g. Sydney CBD to Mascot. Note, though, that this doesn't address the original concern of improving performance for interstate users.
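Turning the 1 ms budget around gives a rough upper bound on how far apart the data centres can physically be. This is a sketch assuming a fibre index of 1.47 and counting propagation delay only. The 15 km Sydney CBD to Mascot fibre path is a hypothetical figure, and 713 km is the approximate straight-line Sydney to Melbourne distance, so any real fibre route between those cities is longer.

```python
C_KM_PER_S = 299_792.458  # speed of light in a vacuum, km/s
FIBRE_INDEX = 1.47        # assumed refractive index of typical single-mode fibre
LATENCY_BUDGET_MS = 1.0   # intra-farm latency limit discussed above

# Distance light covers in fibre per millisecond (roughly 204 km)
FIBRE_KM_PER_MS = C_KM_PER_S / FIBRE_INDEX / 1000


def fibre_one_way_ms(path_km: float) -> float:
    """Propagation delay only; switches, routers and servers add more on top."""
    return path_km / FIBRE_KM_PER_MS


max_path_km = LATENCY_BUDGET_MS * FIBRE_KM_PER_MS
print(f"theoretical max fibre path for {LATENCY_BUDGET_MS} ms: {max_path_km:.0f} km")

# Hypothetical / approximate path lengths, for illustration only
for name, km in [("Sydney CBD -> Mascot (hypothetical path)", 15),
                 ("Sydney -> Melbourne (straight line)", 713)]:
    print(f"{name}: {fibre_one_way_ms(km):.3f} ms")
```

Because every hop adds latency on top of propagation, the practical distance is far shorter than this theoretical bound, which is why same-city data centres are the safe choice.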

How do we improve the performance for interstate users in a publishing (e.g. intranet / public website) scenario?

If users in Brisbane and Melbourne are having performance issues with read-heavy, write-light usage (e.g. an intranet), make sure you are using the SharePoint publishing caches (output cache, BLOB cache and object cache) aggressively. This gives a dramatic performance boost because SharePoint no longer has to fetch data from SQL Server and render it on every request; users simply get the already-rendered HTML pages.
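Why does caching help so much? The toy Python sketch below shows the idea behind an output cache: the expensive fetch-and-render path runs once per cache duration, and every other request is served the stored HTML. It is a conceptual illustration only, not how SharePoint implements its caches (those are configured in SharePoint, not written in code); `OutputCache`, `render_page` and the 60-second duration are hypothetical.

```python
import time
from typing import Callable, Dict, Tuple


class OutputCache:
    """Toy page cache: keeps rendered HTML per URL for a fixed duration."""

    def __init__(self, render: Callable[[str], str], duration_s: float = 60.0):
        self._render = render
        self._duration_s = duration_s
        self._entries: Dict[str, Tuple[float, str]] = {}
        self.renders = 0  # how many times the expensive path actually ran

    def get(self, url: str) -> str:
        now = time.monotonic()
        entry = self._entries.get(url)
        if entry is not None and now - entry[0] < self._duration_s:
            return entry[1]  # cache hit: no database query, no rendering
        self.renders += 1
        html = self._render(url)  # cache miss: fetch content and render it
        self._entries[url] = (now, html)
        return html


def render_page(url: str) -> str:
    # Hypothetical stand-in for querying SQL Server and rendering the page
    return f"<html><body>Content for {url}</body></html>"


cache = OutputCache(render_page, duration_s=60)
for _ in range(1000):
    cache.get("/news/default.aspx")
print(cache.renders)  # 1 render for 1000 requests
```

A real output cache also has to account for things such as user permissions and content changes, but the payoff is the same: most requests never touch the database.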

How do we improve the performance for interstate users in a collaboration scenario?

The most popular solution here is to use WAN optimization (WanOp) devices such as those made by Riverbed and Silver Peak. These devices can not only cache data and content at each branch (i.e. Brisbane and Melbourne), but also apply compression and de-duplication to minimize the number of bytes actually sent across the WAN. These capabilities are needed on top of simple caching because, in a collaboration scenario, the content typically changes regularly.
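De-duplication is the part that helps when content changes. As a conceptual sketch only (not how Riverbed or Silver Peak devices actually work), the Python below splits data into fixed-size chunks, sends a short SHA-256 reference for any chunk the branch has already seen, and zlib-compresses new chunks. The chunk size, function names and sample document are all assumptions for illustration.

```python
import hashlib
import zlib
from typing import List, Set, Tuple

CHUNK_SIZE = 4096  # fixed-size chunks (an assumption for simplicity)


def split_chunks(data: bytes, size: int = CHUNK_SIZE) -> List[bytes]:
    return [data[i:i + size] for i in range(0, len(data), size)]


def send_over_wan(data: bytes, branch_seen: Set[bytes]) -> Tuple[int, int]:
    """Return (raw_bytes, bytes_on_wire) for one transfer to a branch.

    Chunks the branch already holds (tracked by SHA-256 digest) are sent as a
    32-byte reference; new chunks are zlib-compressed and remembered.
    """
    on_wire = 0
    for chunk in split_chunks(data):
        digest = hashlib.sha256(chunk).digest()
        if digest in branch_seen:
            on_wire += len(digest)                # de-duplicated: reference only
        else:
            branch_seen.add(digest)
            on_wire += len(zlib.compress(chunk))  # new data: compressed
    return len(data), on_wire


# Hypothetical document: a user downloads it, a colleague edits one line,
# and the branch then fetches the new version.
doc_v1 = "\n".join(f"Line {i}: quarterly report text" for i in range(3000)).encode()
doc_v2 = doc_v1.replace(b"Line 1500:", b"LINE 1500:")  # same length, one small edit

seen: Set[bytes] = set()
raw1, wire1 = send_over_wan(doc_v1, seen)
raw2, wire2 = send_over_wan(doc_v2, seen)
print(f"first download: {raw1} bytes raw, {wire1} bytes on the wire")
print(f"edited version: {raw2} bytes raw, {wire2} bytes on the wire")
```

Because only the edited chunk crosses the WAN again, the second transfer is a small fraction of the first. Fixed-size chunks keep the sketch short; a production system would more likely use content-defined chunking, so that inserting bytes does not shift every later chunk boundary.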

Windows 7 and Windows 8 client machines also have Microsoft BranchCache built in, which provides similar capabilities to WanOp devices, though it has limitations (e.g. it only works with Windows devices). Here are some further details on BranchCache:

- http://technet.microsoft.com/en-au/network/dd425028.aspx

- http://www.enterprisenetworkingplanet.com/windows/article.php/3896131/Simplify-Windows-WAN-Optimization-With-BranchCache.htm

Of course, the number and specification of servers need to be determined during the SharePoint infrastructure design process (e.g. for a reasonably sized office, it would be wise to add at least one more WFE to the above diagram for performance and high availability). Hopefully, though, I've at least shown you one critical design mistake to avoid.